Researchers have found that AI can distort human memories during questioning, highlighting the risks of using AI in police interrogations and similar procedures.

[2408.04681] Conversational AI Powered by Large Language Models Amplifies False Memories in Witness Interviews

https://arxiv.org/abs/2408.04681

Overview ‹ AI-Implanted False Memories — MIT Media Lab

https://www.media.mit.edu/projects/ai-false-memories/overview/

To study the impact of AI on human memory, researchers at the Massachusetts Institute of Technology prepared an AI chatbot and other AI systems built on specific large language models to converse with humans.

The 200 subjects first watched a video of a crime, then answered questions about it through either one of the AI systems or a Google Forms survey. They were surveyed again a week later using the same method to see how well their memories persisted.

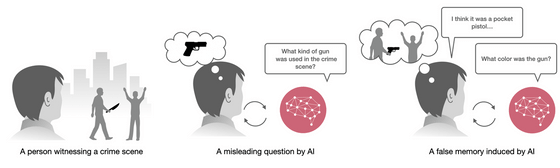

Some of the surveys included deliberately misleading questions. For example, the Google Forms survey included questions such as, “When the robber got out of the car, was there a security camera in front of the store?” In reality, the robber arrived on foot, not by car. The AI was also set up to ask similarly misleading questions, and when a subject gave an answer that accepted the false premise, it reinforced the error by replying, “That’s correct.” A control group was asked no such misleading questions.

The study found that subjects who interacted with the AI chatbot were significantly more likely to form false memories: more than three times as likely as the control group and more than 1.7 times as likely as the Google Forms respondents.

The diagram below shows how AI can distort memories. In the study, subjects were shown a video in which the perpetrator carried a knife, but the AI asked questions such as “What kind of gun was used?”, leading subjects to form the false memory that a gun had been at the scene.

The researchers said: “We found that generative AI is likely to induce false memories. We also found that people who were less familiar with chatbots, more familiar with AI technology, and more interested in criminal investigations were more susceptible to false memories. These findings highlight the potential risks of using AI in situations such as police interrogations.”
