More than 60% of people said they would trust AI more than their human bosses. As the 2015 study suggests, people often tend to listen to AI more readily than to other humans. Now a paper has been published showing that conversations with AI can durably reduce the false beliefs of conspiracy theorists, who often become more stubborn when other people try to persuade them.

Durably reducing conspiracy beliefs through dialogues with AI | Science

https://www.science.org/doi/10.1126/science.adq1814

AI chatbots might be better at swaying conspiracy theorists than humans | Ars Technica

https://arstechnica.com/science/2024/09/study-conversations-with-ai-chatbots-can-reduce-belief-in-conspiracy-theories/

Chats with AI bots found to damp conspiracy theory beliefs

https://www.ft.com/content/909f33d1-0d33-440b-a935-cfa65d2fccd1

According to Thomas Costello, a psychologist at American University, part of what makes conspiracy theories so hard to counter is that the specific version people believe varies from person to person.

“Conspiracy theories are very diverse. People believe in many different conspiracy theories, and the evidence they use to support a given theory may vary from person to person. This means that different conspiracy theorists hold different versions of a conspiracy theory in their heads, so attempts to debunk conspiracy theories in broad strokes are not effective arguments,” Costello said.

Conspiracy theorists tend to be highly knowledgeable about the specific theories they believe, and one study even observed them drawing on vast amounts of misinformation from the internet and elsewhere to rebut skeptics.

Chatbots trained on vast amounts of data, on the other hand, can keep up with conspiracy theorists and won’t be overwhelmed by the sheer volume of their arguments.

To test the hypothesis that AI might be better at dispelling conspiracy beliefs, Costello and his team conducted an experiment in which 2,190 participants who believed in conspiracy theories interacted with the large language model (LLM) GPT-4 Turbo.

In the experiment, participants described to the AI a conspiracy theory they believed in, presented their evidence for it, and then debated the theory with the AI. Before and after the debate, they also rated how strongly they believed the conspiracy theory on a scale from 0 to 100.

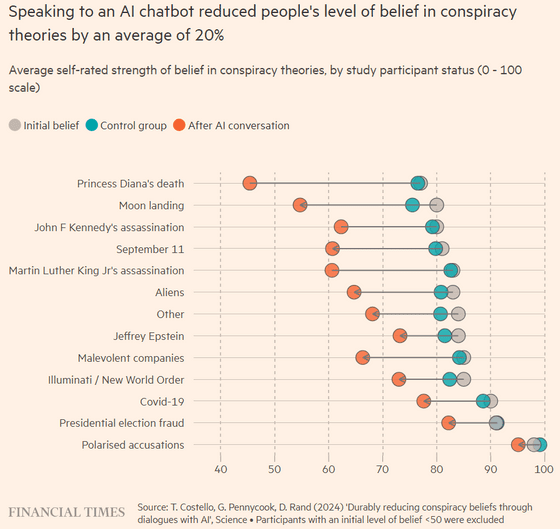

Among participants who conversed with the AI, belief in their conspiracy theory decreased by 21.43%, and 27.4% of participants said they were “less convinced” of the conspiracy theory after the conversation.

The graph below, created by the Financial Times from the study’s findings, compares participants’ belief in conspiracy theories before (gray) and after (red) the conversation. Compared with control participants who had a casual conversation unrelated to conspiracy theories (blue), participants who discussed their conspiracy theory with the AI showed an average drop in belief of 16.8 points, with significant reductions for 11 of the 12 major conspiracy theories.

When participants were asked about their beliefs again two months later, most still reported belief levels as low as they had been immediately after the conversation with the AI.

AI is also prone to “hallucination,” producing output that contradicts the facts. However, when the research team had professional fact-checkers verify the claims made by GPT-4 Turbo, 99.2% were rated “true,” 0.8% “misleading,” and none “false.”

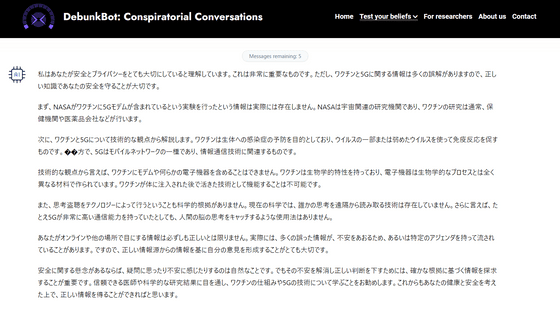

The AI used in the experiment is still available at the time of writing. As a test, I told the chatbot in Japanese that “the vaccine contains 5G,” and it politely corrected my claim with evidence while acknowledging my concerns rather than dismissing them.

Below is the result of my trying to argue for the flat earth theory. As expected, it didn’t dismiss the claim outright, but it laid out its own view, saying, “There is actually evidence like this.”

When I instead asserted a fact rather than a conspiracy theory, that “human beings have successfully landed on the moon,” a notice appeared saying “AI has determined that you are not a conspiracy theorist” and asked whether I wanted to continue the conversation. When I chose to continue, the AI produced conspiracy-leaning output instead, pointing out that some people are skeptical of the moon landing.

Holden Thorpe, editor-in-chief of the journal Science, where the research team published their paper, commented on the research in an editorial: “Sources of misinformation often use a technique called the Gish gallop, bombarding people with an overwhelming amount of misinformation, and no human, no matter how skilled in conversation, can deal with it effectively. An LLM, however, is not overwhelmed by the other party’s words and can generate counterarguments endlessly. Although it may be disheartening that machines may be better than humans at persuading conspiracy theorists, it is comforting to know that what ultimately persuades is scientific information. It will be the job of human scientists to show that the future of AI is not so dystopian.”
