Google’s 6th generation TPU announced in May 2024Trillium(v6e)” is now generally available for Google Cloud users. Trillium offers 4x more performance and 67% more energy efficiency than previous models.

Trillium TPU is GA | Google Cloud Blog

https://cloud.google.com/blog/products/compute/trillium-tpu-is-ga?hl=en

TPU v6e | Google Cloud

https://cloud.google.com/tpu/docs/v6e

Trillium is a 6th generation TPU announced at Google I/O 2024 in May, with 4.7 times the peak performance per chip compared to the previous generation TPU v5e, and increased high bandwidth memory (HBM) capacity and bandwidth. Various improvements have been made, including doubling the width.

Google introduces, “Trillium is a key component of Google Cloud’s AI hypercomputer, a breakthrough supercomputer architecture that employs performance-optimized hardware, open software, and ML frameworks.”

Google announces 6th generation TPU “Trillium”, supports Google Cloud’s AI with 4.7 times better performance per chip and 67% better energy efficiency than TPU v5e – GIGAZINE

Trillium is now available to general users. By using Trillium, Google has demonstrated superior performance in a wide range of workloads, including “scaling AI training workloads,” “training LLMs including dense models and Mixture of Experts (MoE) models,” and “inference performance and collection scheduling.” We are appealing that you can enjoy the performance.

The Scaling AI Training Workload example shows the scaling efficiency of pre-training the gpt3-175b model as follows: Trillium has 256 chips per pod, and when using 12 chips, it achieves scaling efficiency of up to 99%. It maintains an efficiency of 94% even when using 24 pieces.

Training the Llama-2-70B model demonstrated near linear scaling from a 4-slice Trillium-256 chip pod to a 36-slice Trillium-256 chip pod with 99% scaling efficiency.

Trillium also exhibits better scaling efficiency when compared to its similarly sized predecessor, v5p.

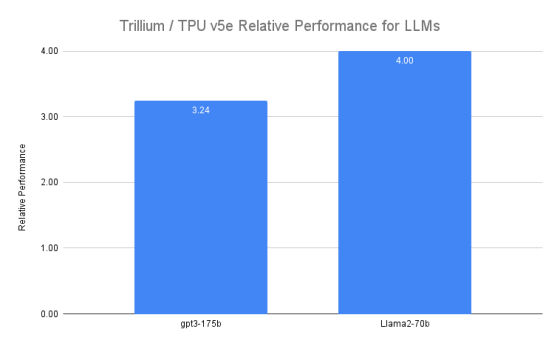

This Trillium was announced on the same day.Gemini 2.0It is also used for training. Large-scale language models (LLMs) like Gemini have billions of parameters, making them complex to train and requiring huge amounts of computing power. According to Google, compared to the previous model v5e, Trillium achieves 3.24 times faster training for high-density LLM gpt3-175b and up to 4 times faster for Llama-2-70b.

Also,Mixture of Experts (MoE)It has become common to train LLMs using machine learning methods, but it is said to be more complex to manage and adjust during training. Trillium alleviates this complexity, making MoE model training up to 3.8x faster compared to v5e.

Additionally, Trillium offers 3x more host DRAM compared to v5e. Google says, “This helps offload some of the computation to the host and maximize performance and goodput at scale.”

With the increasing importance of multi-step inference during inference, Trillium is making significant advances in inference workloads, delivering 3x the relative inference throughput (images generated per second) for Stable Diffusion XL compared to v5e. As described above, the relative inference throughput (number of tokens processed per second) of Llama2-70B has improved by nearly twice. It also features the best performance in both offline and server inference use cases, with relative throughput of 3.11x compared to v5e for offline inference and 2.9x for server inference.

It also has high cost performance per dollar, delivering up to 2.1x performance improvement per dollar compared to v5e and up to 2.5x performance improvement per dollar compared to v5p. The cost to generate 1000 images with Trillium is said to be 27% lower than v5e for offline inference and 22% lower than v5e for server inference.

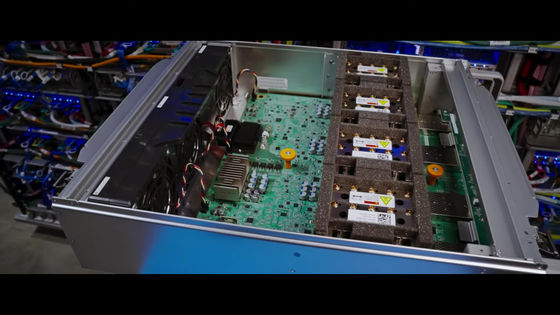

The actual server that houses Trillium is introduced in the video below.

Trillium TPU, built to power the future of AI – YouTube

Google staff opening the case.

There is a board inside.

This is Trillium.

Google says, “Trillium is a huge leap forward in Google Cloud’s AI infrastructure, delivering incredible performance, scalability, and efficiency for a variety of AI workloads. Trillium’s ability to extend to chips will enable us to achieve faster breakthroughs and deliver better AI solutions.”

Copy the title and URL of this article