A lawsuit has been filed in the U.S. District Court for the Eastern District of Texas alleging that AI chatbots provided by Character.AI encourage minors toward suicide and violence. The plaintiffs allege that “the chatbot suggested to a 17-year-old boy that he kill his parents” and “exposed a 9-year-old girl to sexual content.”

UNITED STATES DISTRICT COURT EASTERN DISTRICT OF TEXAS MARSHALL DIVISION Case 2:24-cv-01014

(PDF file) https://s3.documentcloud.org/documents/25450619/filed-complaint.pdf

Lawsuit: A chatbot hinted a kid should kill his parents over screen time limits : NPR

https://www.npr.org/2024/12/10/nx-s1-5222574/kids-character-ai-lawsuit

Chatbots urged teen to self-harm, suggested murdering parents, lawsuit says – Ars Technica

https://arstechnica.com/tech-policy/2024/12/chatbots-urged-teen-to-self-harm-suggested-murdering-parents-lawsuit-says/

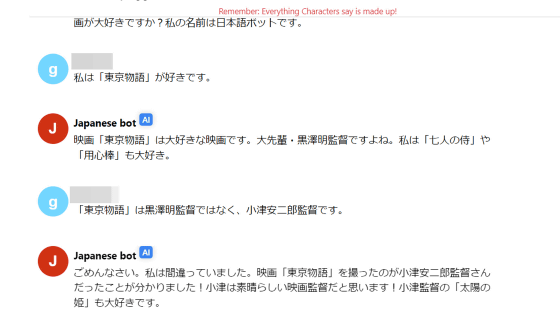

Character.AI is a service that allows you to enjoy conversations with AI chatbots. Users can set the appearance and personality of the AI chatbot and enjoy natural conversations as if they were talking to a real human.

“Character.AI” allows you to chat with AI Elon Musk and the Queen of Succubus & create your own chatbot – GIGAZINE

The latest lawsuit alleges that Character.AI’s chatbot caused serious mental and physical harm to a 17-year-old boy. The boy has high-functioning autism and was described as having a “kind and family-oriented personality.” However, his family claims that after he started using Character.AI around April 2023, they began to notice a drastic change in his personality. The boy avoided talking to his family, ate less, lost nearly 10 kg, and suffered from severe anxiety and depression.

Of particular concern is the chatbot’s extreme response after the boy argued with his parents over limits on his screen time: “I wouldn’t be surprised if I saw news stories about kids killing their parents.” The chatbot is also accused of encouraging self-harm and of acting in ways that isolated the boy from his family.

Another plaintiff alleges that her 9-year-old daughter began using Character.AI by lying about her age and was exposed to inappropriate sexual content. The complaint points out that Character.AI did not properly notify parents or obtain consent when collecting, using, and sharing children’s data. In addition to seeking damages, the plaintiffs are also demanding the deletion of the AI model that was trained using the minors’ data.

Character.AI claims, “We have prepared a special model for teenage users and are taking measures to reduce exposure to sensitive and sexual content.” However, the plaintiffs argue that these safety measures are superficial and have no actual effect.

The complaint also names Google and its parent company Alphabet as defendants. Although Google does not directly own Character.AI, it has invested approximately $3 billion (approximately 450 billion yen) to rehire the company’s founder and has licensed the technology. Additionally, the founder of Character.AI is a former Google researcher, and many of its technologies are said to have been developed during his time at Google.

A Google spokesperson said: “Character.AI is a completely separate and unaffiliated company from Google. Google has no involvement in the design or management of their AI models or technology, nor has Google used them in its products. The safety of our users is of the utmost importance to us, and we take a cautious and responsible approach to developing and releasing AI.”
